Or more accurately, scoring high on my most recent pharm exam spells relief. I mentioned in a previous post how I walked out of the first pharm exam of the semester worried about whether I had passed. I was right to worry. I did pass, but barely. And that honestly made me very nervous. With the way PA programs are set up, you can’t afford to fail a class. There’s no “make it up next semester.” Generally, if you fail a class, you’re out. Some programs might allow a make-up exam or some form of remediation if you’re just on the cusp, but most don’t.

And I honestly find pharm the hardest of my classes. This is not because of how it’s taught or anything, but because it’s mostly rote memorization. Often in other classes, you can reason out the answer from first principles. But less so with pharm. It was my lowest grade last semester. So I was nervous that if the trend continued, I’d be in real trouble.

Now, back in my days at RPI I let my ego get in the way. I mean, why not? I was one of the highest-ranked students in my high school. Obviously I was smart. So if I had trouble in my classes at RPI, I didn’t need help. I could figure it out on my own. But the truth was, I couldn’t and didn’t. It wasn’t until I started asking for help more that I did better. When I started my journey to PA school, I vowed I’d ask for help when I needed it. And I have been. So, with hat in hand, I emailed the course director on my campus. As I mentioned in a post late last year, this is required any time we fail an exam. But if we pass, even if barely, it’s not required. So I could have let my ego get in the way and simply tried to tough it out. I’m glad I didn’t.

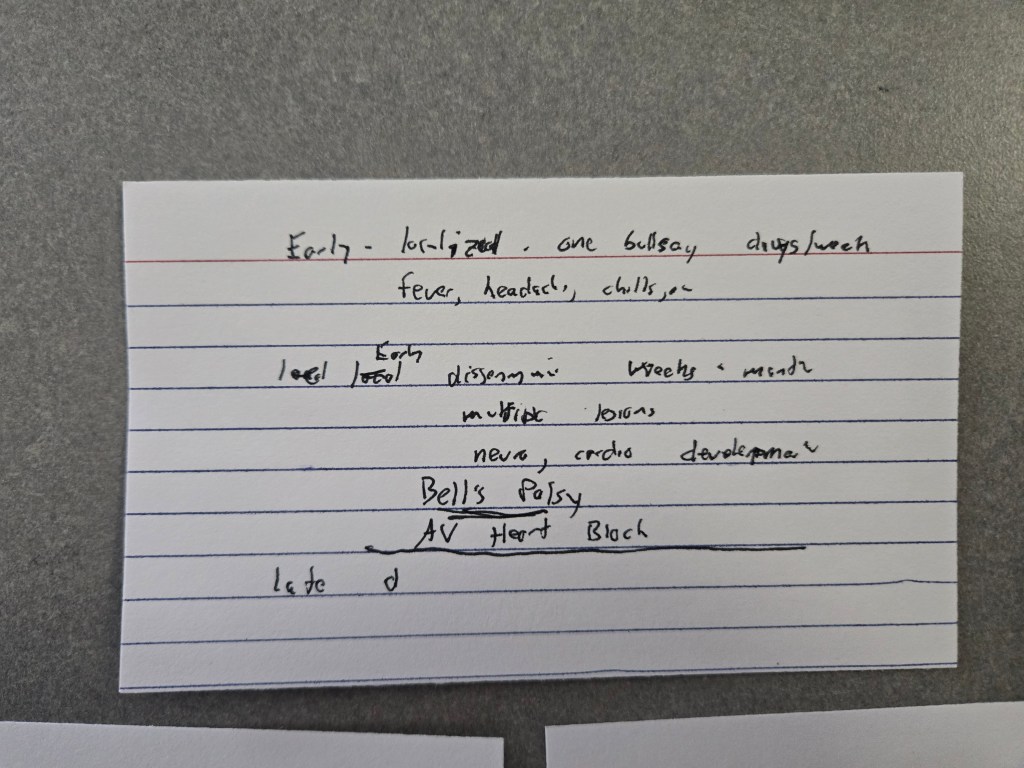

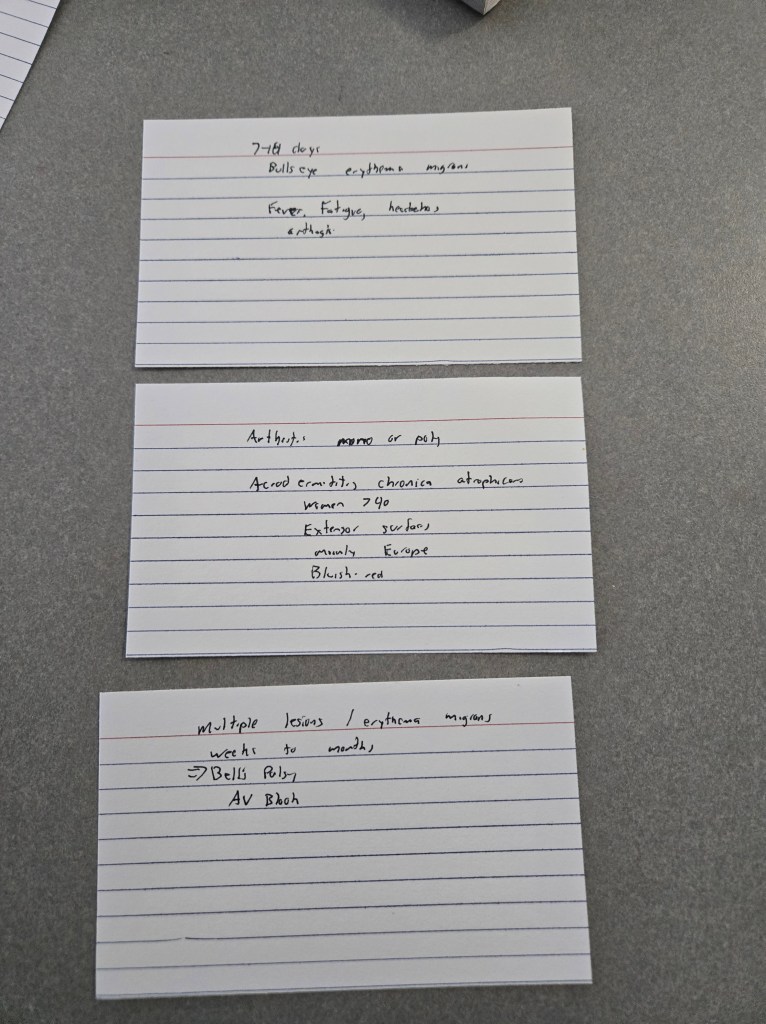

Now I’d like to say there was some breakthrough here and she gave me the key piece of advice. But it’s not quite that simple. We did talk about what I got wrong and why, and how to approach the upcoming exam. She gave me a lot of reassurance. And yes, she did give me an idea or two to help with my study habits. We then met again last Friday, which helped reinforce some of this. The one real study habit I changed was how I built and studied my flashcards. Instead of starting from drug names and then moving to things like indications, side effects, etc., I focused on certain keywords, such as specific contraindications and side effects, and then worked back to the drug name. For some drugs, I also built little mnemonics or memory palaces.

For example, one mnemonic I built was to remember that Benznidazole is for Chagas disease (spread by the “kissing bug”): Ben kisses someone and gets weak in the knees/bones (bone marrow depression is an adverse effect) and all tingly in the fingers (peripheral neuropathy is another adverse effect), and if Ben kisses too much he could get someone pregnant (a pregnancy test is one of the tests you want to give before prescribing it).

And it worked. While taking the test, there were a number of times when, just from reading the stem, I felt confident of the answer even before looking at the possible choices. This is always a good sign. There were a few I definitely had to think about a lot. And honestly, one or two I simply guessed at. But in the end, I came away with my second-highest exam grade so far this semester. It salvaged my pharm grade to the point where even if I barely pass the final two exams, I’ll pass the class. Technically I could just barely fail both and still pass. Not that I plan on that.

But the relief I feel is very hard to describe. I’ve sort of been on a high from it for a few days.

I’ll take that.